Apple Takes Its Bite

Apple has been taking its time with AI, but at WWDC this week, it laid out its vision of the future.

This battle station is fully operational.

We don’t talk much about Apple and AI in this newsletter, mostly because Apple is taking its sweet time when it comes to AI, and it doesn’t make a lot of big waves.

Under the banner of “Apple Intelligence,” the company has rolled out pleasant-but-not-surprising features for iOS and macOS in small batches over the past year or so. Most of its machine learning and AI work has landed quietly and been received well enough. When people think about AI taking jobs, ruining art, or destroying education, they imagine Sam Altman or Elon Musk, not Tim Cook.

Obviously that’s by design. OpenAI or Anthropic can ship strange, broken chat and image-generating tools with impossible names that do wild stuff; if Apple did that, it would look bad and could ding its stock price. Apple has had some missteps, like its weird Genmoji maker (typical Reddit comment: “An image generator for babies”). But mostly, it has just played AI safe.

Want more of this?

The Aboard Newsletter from Paul Ford and Rich Ziade: Weekly insights, emerging trends, and tips on how to navigate the world of AI, software, and your career. Every week, totally free, right in your inbox.

Now it’s WWDC, Apple’s annual Worldwide Developers Conference, and the company is releasing lots of nerdy stuff: a new version of iOS is coming, and there’s a new interface called “Liquid Glass” that’s pleasingly blobby. But one of its most interesting announcements is something it calls the “Foundation Models framework.”

One big thing Apple does that only programmers tend to notice is release “frameworks”: bundles of code that third parties can reuse across Apple’s operating systems. When Apple wanted to “go into” health in a big way, for example, it released the HealthKit framework. App developers can use that framework to access health information that iOS gathers, like heart rate from an Apple Watch, or miles cycled.

Apple keeps things so locked down that this is the only way developers can get this information, but the framework is well documented and works well, so for many people that’s a good trade. App users get privacy assurances, because developers have to work within Apple’s guidelines. Developers code up their apps using the kits, Apple approves the apps, and they’re sold in the App Store, where Apple gets a cut. Google does something similar with Android, but Apple is special. It controls everything with a silicon fist. There are many, many, many “kits,” and Apple has no problem saying “no.”

That’s what Apple has done with AI: It has created a closed framework for working with LLMs that integrates deeply with its operating systems and runs across its entire software development kit. This framework is totally under Apple’s control, but accessible to developers.

Apple’s smaller AI model runs right on the iPhone, as part of iOS. It can chat with you and offer writing prompts, and it seems it can also work with images. There’s also a second, much larger LLM, far too big for a single phone, that runs on Apple’s servers. This means you can do lots of little AI tasks right in your hand, then call out for bigger ones, and never, ever leave Apple’s world. No Claude, no OpenAI. Apple says its AI models are competitive; the big one isn’t as good as ChatGPT yet, but it’s solid.

Going further, all of this functionality is available directly from Swift, Apple’s own (but open-source) programming language. If you’re an app developer, with a few lines of code you can call Apple’s on-device model, and use the results right inside your app. You never leave Swift, and, more importantly, you never leave Apple. Which means that your users never leave Apple, either.

Reading through Apple’s full technical overview, you get a sense that the wild, expansionist era of AI is starting to end. Apple emphasizes that it trains its models ethically, respecting all the rules of the internet and avoiding private user data. Here’s how it deals with images: “In addition to filtering for legal compliance, we filtered for data quality, including image-text alignment. After de-duplication, this process yielded over 10B high-quality image-text pairs.”

Of course, “ethically” doesn’t include investing in and supporting the creators whose content Apple harvests, which would actually be, you know, ethical. “Ethics” here basically means “if there’s an unlocked bike, but it’s inside a fence, we don’t steal the bike.” That said, when it comes to AI in 2025, a large organization that simply follows norms and rules is an improvement.

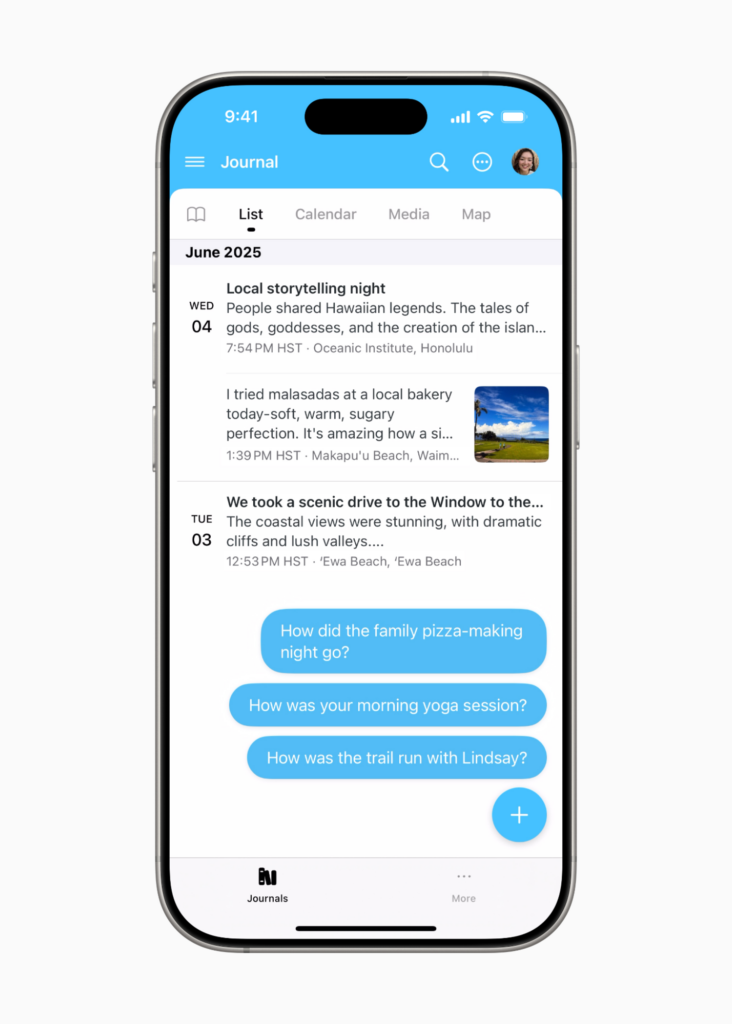

How will it actually work? One demo showed a journaling feature in Day One: the app asks you how your day went and prompts you to write in your journal. I’m sure people will experiment with this. I’d expect to see a bunch of AI therapists, or game characters who talk to you. After that, I’d expect AI to start integrating with all the kits, often right on your phone, with privacy preserved.

A health app might look at your jogs and suggest a different route with more points of interest. A chat app might highlight friends you haven’t talked to recently, and offer some prompts to restart the conversation. A calendaring app might help you organize your schedule. The operating system might look at your activities and suggest apps to download. All roads will lead back to Apple, of course.

This will take time, and Apple has time. It won’t be like when OpenAI drops a new ChatGPT and everyone gets excited. Apple’s AI will keep improving, finding its way into more and more apps and functions. Apple will use it to make tens or hundreds of billions of dollars over the years, and most of it will show up as neat features all across Apple’s vast ecosystem, little by little. And as Apple goes, so will Google and Microsoft. You’ll have to pay close attention, because these organizations don’t feast on drama the way the AI companies do, and they’re moving things forward on their own schedule.