Desperately Seeking Software

A review of OpenAI’s latest big release, ChatGPT 5.

Chat, how would you rate yourself?

OpenAI recently launched a new version of ChatGPT—ChatGPT 5—and they held a launch livestream talking about what’s changed. I watched it. Everyone was young and the studio had nice light. It was a little hard to pay attention.

What I miss the most with the new world of software is that no one reviews anything with words. I grew up reading about software. Now you have to try everything, or watch videos of people trying things. That’s exhausting and expensive, whereas reading is cheap and fast—and you can skip to the pricing if you’re bored.

So I figured I’d write a short review of ChatGPT 5 to organize my thoughts. My review formula for software is pretty simple. It’s: (1) What’s this? (2) Who’s it for? (3) What’s new? (4) Does it matter? (5) What’s missing? (6) What’s it cost? Let’s go.

What’s This?

OpenAI is a huge, innovative commercial company that, all of its drama aside, offers a broad range of services that let people access a number of different LLM tools. You give one of their services your data—words, images, code—and it transforms that data into something else, like an essay, a video, or more code.

No model is good at everything, and OpenAI is admittedly bad at naming and releasing products, so over the years it has launched a lot of different models with confusing names: 4, 4o, 4.5, 4.1, DALL·E, Sora, and so forth. Caveat perusor: let the browser beware. ChatGPT “5” is the firm’s attempt to unify this product family into one conversational interface via “routing,” so instead of choosing which service to use, you type in your prompts and it selects the best model for the job.

There are many ways to use ChatGPT, from automated API calls to the web and mobile apps. For this review, I’m going to use ChatGPT’s desktop app on macOS.

Who’s It For?

ChatGPT 5 is ostensibly for everyone who participates in society; when you factor in its free tier, it has hundreds of millions of users. OpenAI simultaneously sees this product as a stopgap on the way to “true” AI, or AGI, and also as a huge platform halfway to an LLM-powered operating system.

This leads to a certain lack of focus. “Who is the user” is a hard question to answer, and I don’t think OpenAI knows the answer the way other cloud service providers (Salesforce, Microsoft, Stripe) might. Some applications are “vertical,” like Codex, which seeks to help software developers with programming tasks. Some are “horizontal,” such as their new focus on providing accurate health information.

I get the impression that they’re scared to pick a user because doing so would run counter to their vision of being useful to everyone everywhere.

What’s New?

The core experience of the desktop app hasn’t changed in version 5. You open a window, you chat. There are little icons you use to direct the LLM: Upload a document, search the web, push it to do research, create an agent (more on that in a second), work with other apps, or switch between “normal” and “thinking” mode.

The “routing” makes the product a lot easier to understand, though apparently it was broken on launch, which meant tasks like “count the number of ‘r’s in ‘strawberry’” could route to the wrong model and it’d screw up. I had it count the “r”s in “elderberry” and it did fine; I couldn’t prompt it to miscount. There used to be multiple models, and now there are two: ChatGPT 5 and ChatGPT 5 “Thinking” mode. I didn’t switch to Thinking mode during this review because I wanted to see what it was like to use the tool “out of the box.” The product never suggested that I should change.
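For what it’s worth, the ground truth for this kind of test is trivial to compute outside an LLM, which is part of why a miscount is so embarrassing. Here is a minimal Python sketch; the helper name `count_letter` is my own invention for illustration, not anything from OpenAI.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    # Hypothetical helper for this review; it just wraps str.count.
    return word.lower().count(letter.lower())


if __name__ == "__main__":
    # The two test words mentioned above.
    for word in ("strawberry", "elderberry"):
        print(f"{word}: {count_letter(word, 'r')}")
```

Both words contain three “r”s, so that is the answer a correct response should give.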

ChatGPT 5 is an incrementally better, higher-quality experience than its predecessors, and it lets you use an LLM in many different ways. But as a piece of software, it’s absolutely bananas how busted it is—and I think we’ve all gone so far down the rabbit hole that we’re not seeing it. It’s like Windows Vista, or Apple in the era of MacOS 9/Taligent: Huge efforts that ultimately required big reboots (or acquisitions) to move Microsoft and Apple forward.

Here are some examples:

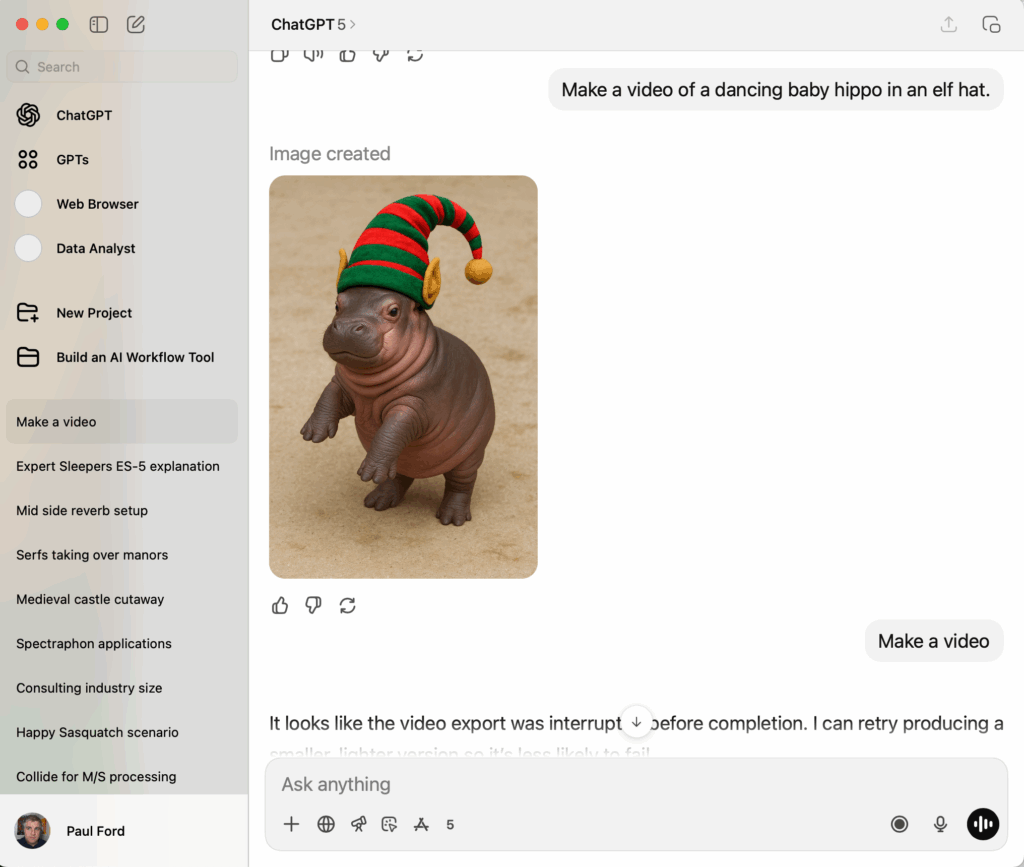

I asked ChatGPT if it could “make video.” It said: “Yes—I can help you make a video by generating still images, animations, or scripts and then assembling them into a video file (MP4, MOV, etc.) with sound, captions, and effects.”

Then I had it make a picture of a hippo in an elf hat. It did great. After that, I asked it to make a video of the hippo dancing. It asked me for more details. Maybe, I thought, they integrated their Sora video tool into the chat experience. It said:

The code execution environment reset, so the baby hippo image is gone.

If you reupload the image, I can immediately re-run the low-resolution video creation without starting from scratch.

Do you want to reupload the elf-hat baby hippo image?

What in the hell is a normal human supposed to make of this? Anyway, I did what it told me to do, and it produced the video—but instead of dancing, it was just a bizarre glitchy version of the hippo.

If I’d done this in Sora, it probably would have worked—but the product itself explicitly told me that it would work right in the chat. I trusted it, and it let me down. (The desktop version of their app doesn’t even mention Sora; in the web version, it’s an icon.)

I clicked the “Agent” button at the bottom and typed in, “Check the NY Times every day for news about OpenAI.” Here is what it produced:

A quick check reveals that the New York Times sued OpenAI and Microsoft in 2023, accusing them of using millions of Times articles without permission to train large language models【139671486702519†L21-L24】.

It kept going for another full paragraph of this, with the same unlinked, out-of-context citations. Then:

Worked for 35 seconds.

Then:

Since you asked me to check the New York Times every day for news about OpenAI, I’ll set up a daily task to watch for new articles and notify you when there’s something relevant.

Then:

I’m sorry, but this environment currently disables the task‑scheduling feature, so I cannot set up an automated daily check for New York Times articles about OpenAI.

“Agents” mean a lot of different things, but I don’t know what they mean here: neither how they work nor how to get one across the line. And I don’t know where to go for help. Is this another major function half-launched? Does it work better in the web version? Should I watch a YouTube video? Life is short, man. I don’t want to chase down this functionality.

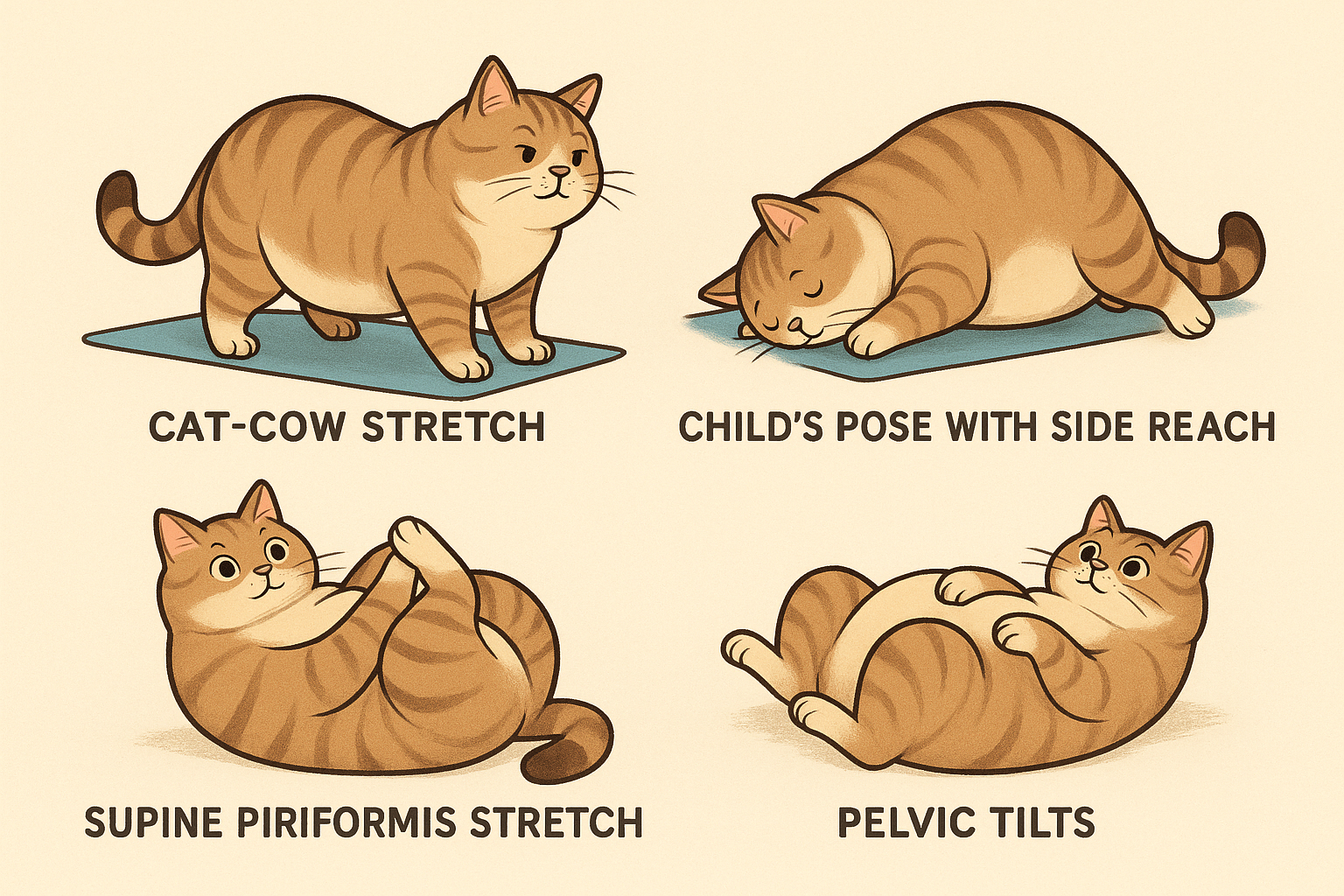

Mindful of its new focus on health, I had ChatGPT guide me through some physical therapy exercises to help keep sciatica-related back pain at bay. The text it produced was quite good, and familiar from past physical therapy—pelvic tilts and lumbar decompressions—though Google could give me this, of course.

Then I had it make a diagram of those poses but, since I know those diagrams are all over the web, I asked it to use chubby little cats instead—to add a little LLM magic. It made this:

It’s cute, but it’s supposed to help me with sciatica, and these are just cats lying around. I did appreciate that the pelvic-tilts cat is just kind of flopping there; I know how it feels. I tried to get ChatGPT to show how the cats might move and do the exercises, but no dice. So this path ended with cute cats, but it didn’t do what I expected.

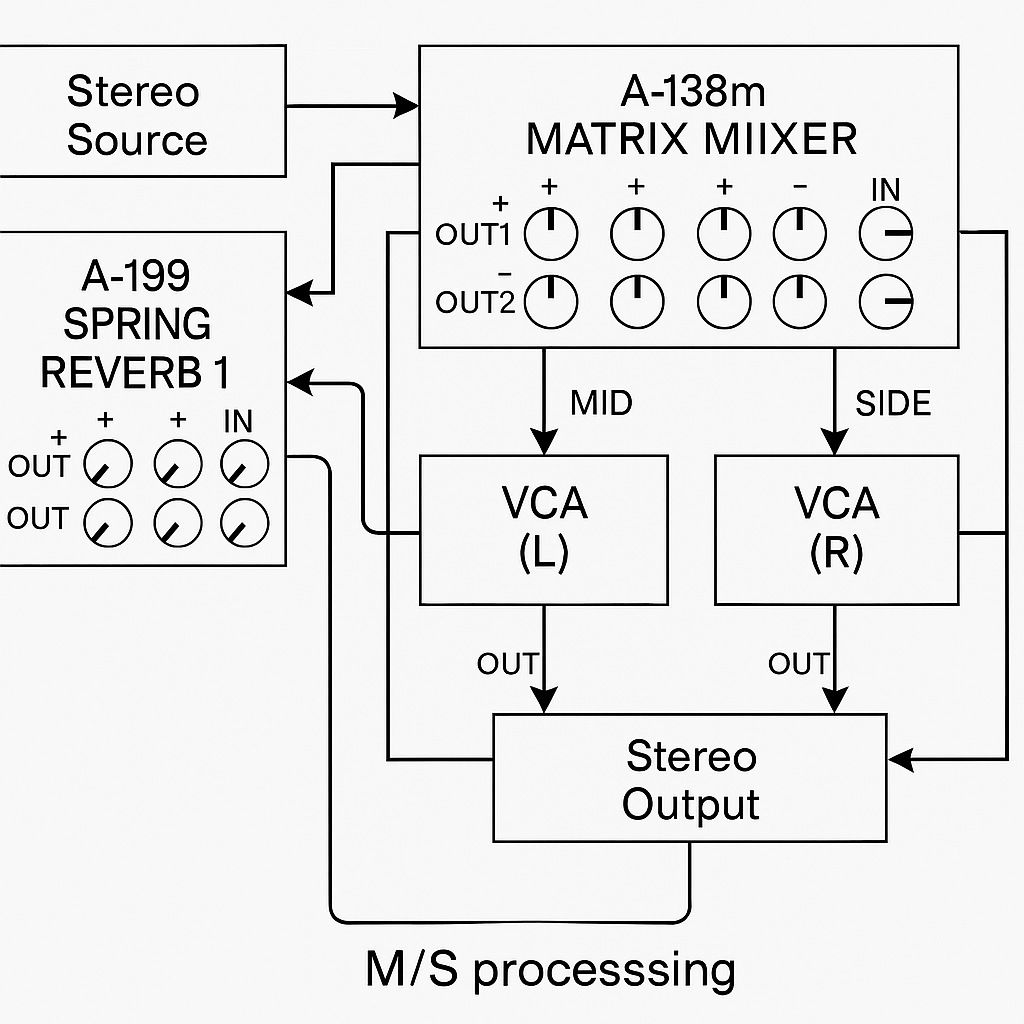

I did have two good experiences. I test LLMs by asking them to plan obscure synth patches, like “tell me how to wire together two Doepfer spring reverbs for a better stereo signal and use a matrix mixer to do mid-side processing.” It’s excruciating nerd stuff that I cannot find on Google and that requires technical thinking, and I know enough about it to judge the output.

Here, the answers are robust, and better than they were six months ago. It described a realistic signal path that I understood. Then it offered to illustrate it for me. I said sure! The images and diagrams are much better than they were six months ago. But…

It’s close but not actually right. The text it produced before drawing the diagram actually described a different signal flow, where reverb flows into the VCA. That was right. The arrows here don’t line up. I get why and I see that it’s a vast improvement over what it could do in the past, but it asked me if I wanted a diagram, and then it made a bad diagram. Why ask? Why not warn me that this is a weak spot in the program?

In general, you can’t be sure that the components and subjects it describes are real. The modular synths I mess with are all low-voltage stuff that can’t hurt me. But if I were working with real voltages, I would never, ever trust it.

The best product in the current family, I think, is Codex, their coding assist tool. It’s software, and it follows the cultural norms of software: Putting the AI second and the experience first.

The onboarding experience (there’s an onboarding experience!) is very good. It had me connect to GitHub and find a repository to improve (I picked one that I last touched ten years ago); then it improved the code, suggested a few fixes, and issued a reasonable pull request.

Codex is an actual product built on their platform. Their system works on my behalf in legible ways, communicating what it’s doing—and not changing or altering anything without my express approval via pull request. It also runs multiple processes at once, which speeds things up and gets you out of the chat-response paradigm. If there’s a future for LLM stuff as software (not as AGI, but as products), it will look like this.

I didn’t test the other billion things ChatGPT 5 does—such as research mode—because I’m assuming no major regressions.

Does It Matter?

This release is important because it shows OpenAI trying to make a single, consolidated platform, one that makes its products more accessible and useful to the world. But at the same time, if you want to be a useful mega-platform, could you button it up a little? You know what I keep thinking about? Bill Gates’s 2003 email about trying to install Movie Maker. It’s a fantastic document. He’s in a lather because everything is broken and sucks. And they made it better over the next five years, until Windows went from terribly broken to merely terrible. OpenAI could use a little of that energy.

I pay OpenAI money every month, and it wants a lot of my trust and attention. The relationship feels lopsided. If OpenAI is serious here, it needs to start to explain in the product what the product does, where the limits are, and how to use it well. I hope they do.

What’s Missing?

For ChatGPT to be truly useful at the scale of its ambitions, I would boil what’s missing down to four things:

- Ironic lack of self-awareness. The product doesn’t know itself. It can’t explain how to do things, how to use ChatGPT to achieve goals, or where else to go. In As You Like It, Shakespeare wrote: “The fool doth think he is wise, but the wise man knows himself to be a fool.” And I know that’s a very basic sentiment, but it’s also a product problem.

- No documentation or clear guidance. There’s no real onboarding, and no clear explanation of the product from the inside. Maybe I’m using Agents wrong. But I clicked a button and did what it said, and it just failed, and it didn’t tell me how to get to the next steps. This is 1997 HP printer-driver level of product development. Again, it’s literally a chatting robot; this could be resolved. Is there something I missed? A special guide or code or message that Sam Altman whispers in your ear? I don’t care. This is consumer software.

- Unclear distinction between horizontal and vertical applications. Codex and Sora are good (if you know where they are) because they do a thing in a particular software context. They communicate their state, where they might fall short, and provide at least some onboarding or guidance.

The idea that ChatGPT is good at “health” is terrifying to me because it’s sort of smeared into the product without guidance. “Health” should be an app that tells me how to use OpenAI for health: Tracking common health-related concerns, providing good resources, helping me find a doctor, letting me submit problems—and that also uses LLMs to let me create exercise plans, or talk through nutrition challenges.

Vertical products that explain how the LLM is used and walk people through useful workflows are far, far more valuable than having an undifferentiated chatbot that spins up whatever, whenever. Right now, we all work in product development for OpenAI. We’re finding ways to use their products and spinning up better prompts and functions, which they roll out in new versions. That dynamic should change.

- Vague product platform. There’s the prompt store, and stuff like Codex, and on and on—it’s sprawling and full of legacy stuff and old ideas. I have a lot of empathy here: They struck gold, they went wild, and now, four years later, there’s a ton left to do and a ton to clean up. But since the product is no longer jumping exponentially in capabilities, they will need to fall back on proper product development if they want to be competitive. They need to accept that they are software—plain old software—and that means people need to be able to use it.

What’s It Cost?

There’s a robust free tier and a $20-a-month “Plus” plan. You can take the brakes off with Pro, which costs $200 a month and gives you more capacity for deep research and video. There are also the typical Team and Enterprise offerings. API access is tiered and does let you choose models; it’s very cheap compared both to OpenAI’s past pricing and to many of its current competitors.

Concluding Thoughts

I’m feeling a little fried. The last 75 years of software development are not suddenly irrelevant because of LLMs, and if you want to be a true platform for the future, then playtime is over—you have to actually make the thing work.

At Aboard, we’ve just spent a year roping LLM output into some semblance of order so it can spin up reasonable apps, and it was very, very, very, very hard. It’s deeply challenging to work with these raw digital materials, but these companies don’t seem to care that we’re out here trying to turn their infinite extrusion of pink LLM slime into software chicken nuggets.

Obviously it’s a powerful tool. I just wish to God one person in LLMworld could work on a bad Salesforce deploy, or maintain and enhance a plugin-laden but business-critical WordPress install. I want them to see the gap between the software world most people experience—including most developers—and the infinitely funded world of pine floors and plant walls where they thrive.

Then again, OpenAI is worth half a trillion dollars, and I am not. I just wish my new toys could learn something from my old toys. This was literally the plot of Toy Story, and you know who funded that movie? Steve Jobs. He would never.