Four Terror-Free Ways to Talk About AI

New technologies are hard for humans to grasp in the best of times, and the tail of 2024 is not the best of times.

You have to read all of these.

One of the more thoughtful resources on what AI is doing to organizations is Ethan Mollick’s newsletter, “One Useful Thing.” Mollick is a professor at the Wharton School at the University of Pennsylvania, which we must not hold against him. His newsletter is not academic in tone, but it does have an academic thoroughness that I appreciate.

His most recent edition was about AI adoption:

A large percentage of people are using AI at work. We know this is happening in the EU, where a representative study of knowledge workers in Denmark from January found that 65% of marketers, 64% of journalists, 30% of lawyers, among others, had used AI at work. We also know it from a new study of American workers in August, where a third of workers had used Generative AI at work in the last week….Yet, when I talk to leaders and managers about AI use in their company, they often say they see little AI use and few productivity gains outside of narrow permitted use cases. So how do we reconcile these two experiences with the points above?

Emphasis mine. His explanations resonated with my experience so far—the way I see it, AI just hasn’t made the big time yet from an organizational perspective. It’s a pattern we’ve seen many, many times before: The random Linux server in the IT closet, 20 years later, becomes Amazon Web Services, leased by the minute. The tiny brochure-style website, 25 years later, is now the driver for much of the company’s growth. A small group connects on Slack, and a few years later, all 30,000 employees across the company rely on the platform. The great fantasy of every marketer is that you can speed this process along, or make it happen—but change is hard for humans, and even harder for bureaucracies.

Mollick’s piece made me think about how I’m talking about AI with businesspeople (and not-for-profit and education people, too). Here are four principles I’m working through:

Be the Boss. This has now happened a few times—someone will ask me, a human, for advice on building a software product. Then I will open up ChatGPT, not a human, and use my best prompting: You are a senior software architect. Define the full plan for building [describe thing here]. Include a complete breakdown of the sub-system and tools you’d use. Prefer open-sourced, highly-vetted tools.

It will produce a thousand words, organized in bullet points, and frankly, it’s very good. I’ll throw that into an email and then go line by line, giving feedback on it—just riffing, looking for ways to cut scope, prompting further conversation.

I’ve hired many software architects, and, along with my co-founder Rich, I’ve reviewed their work and offered lots of feedback. It feels right to me, then, that I ask the AI to do something I can evaluate and react to as a very human boss. The AI speeds things up but can be kind of extra; I add value and suspicion. Everyone wins.
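If you reuse the “senior software architect” prompt a lot, it can help to keep it as a template instead of retyping it. Here is a minimal Python sketch. The function name `architect_prompt` is hypothetical, and the wording is adapted from the prompt quoted above, with the “[describe thing here]” slot turned into a parameter. It only builds the text. Sending it to ChatGPT or Claude, and going through the answer line by line as the boss, is still your job.

```python
def architect_prompt(description: str) -> str:
    """Fill the 'senior software architect' prompt with a product description.

    Hypothetical helper: the template text is adapted from the prompt in
    this piece, with the [describe thing here] slot as a parameter.
    """
    description = description.strip()
    if not description:
        raise ValueError("describe the thing you want built")
    return (
        "You are a senior software architect. "
        f"Define the full plan for building {description}. "
        "Include a complete breakdown of the subsystems and tools you'd use. "
        "Prefer open-source, highly vetted tools."
    )


if __name__ == "__main__":
    # Paste the result into a chatbot, then review the answer line by line.
    print(architect_prompt("a web app that tracks volunteer shifts"))
```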

Read everything. Related to the above—as I’ve messed with coding projects, I am finding that ChatGPT and Claude are brilliant little stochastic buddies, but also that every fiber of my being just wants to cut and paste their slop into my code editor in the hope that I can avoid any actual effort. This never works, any more than cutting and pasting from Stack Overflow worked. Things break, then you ask the bots to help you debug, and they screw that up, and you have no clue what’s going on. You’ve given up agency. The answer is simple: Don’t. You have to read everything, and you have to think. Sorry. If an AI makes something and you don’t have time to read it, you’re using the technology like a chump. Or, to put it more simply: Not only do you have to be the LLM’s boss, you have to micromanage, too.

Build pipelines, not prompts. Everyone likes to point out the magic tricks. But this technology works best as a sequence of transformations that are guided by a lot of thought. For example, in coding, you ask the LLM to break down the problem into an architecture, then go piece by piece and break each bit down even further. Maybe even generate some diagrams. Once you fully understand all the pieces and have a mental model, you can ask it to convert those descriptions into code and build an application from there, ideally with unit tests and docs. The merit of this approach is that it seems to actually work, as opposed to just typing “build me a Facebook clone,” which might spin up a bunch of code but doesn’t hold up over the long haul. The other merit is that, once you find pipelines that work, they’re very reusable: the guidance you give to create one web component can be applied to hundreds of others. This is…process. Which is something organizations love and understand.
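One way to picture “pipelines, not prompts” in code: a fixed sequence of stages, each carrying its own reusable instructions, where every stage’s output becomes the next stage’s input. This is a minimal Python sketch under stated assumptions. The stage names and instructions are illustrative, and `ask` is a hypothetical stand-in for whatever model you call, not any vendor’s API. A fake model keeps the example runnable offline. The pipeline returns every intermediate result, because the point is that you read each one.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for a real model call: takes a prompt, returns text.
Ask = Callable[[str], str]


@dataclass(frozen=True)
class Stage:
    name: str
    instructions: str  # reusable guidance, written once and applied many times


# Illustrative stages following the text: architecture, then smaller pieces,
# then code with unit tests and docs.
STAGES = (
    Stage("architecture", "Break this problem down into an architecture:"),
    Stage("components", "Break each part of this architecture into smaller pieces:"),
    Stage("code", "Convert these descriptions into code, with unit tests and docs:"),
)


def run_pipeline(task: str, ask: Ask, stages: tuple[Stage, ...] = STAGES) -> dict[str, str]:
    """Run each stage in order, feeding one stage's output into the next.

    Returns every intermediate result keyed by stage name, so a human
    can read each one instead of trusting only the final output.
    """
    results: dict[str, str] = {}
    current = task
    for stage in stages:
        current = ask(f"{stage.instructions}\n\n{current}")
        results[stage.name] = current
    return results


if __name__ == "__main__":
    # Fake model for an offline demo: it just reports the prompt length.
    def fake_ask(prompt: str) -> str:
        return f"[model output for a {len(prompt)}-character prompt]"

    # The same stages are reused across different components.
    for component in ["date picker", "search box"]:
        out = run_pipeline(f"Build a {component} web component.", fake_ask)
        print(component, "->", list(out))
```

The stages are plain data rather than code, which is what makes them reusable: swapping in a different component only changes the task string, and refining the guidance means editing one instruction in one place.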

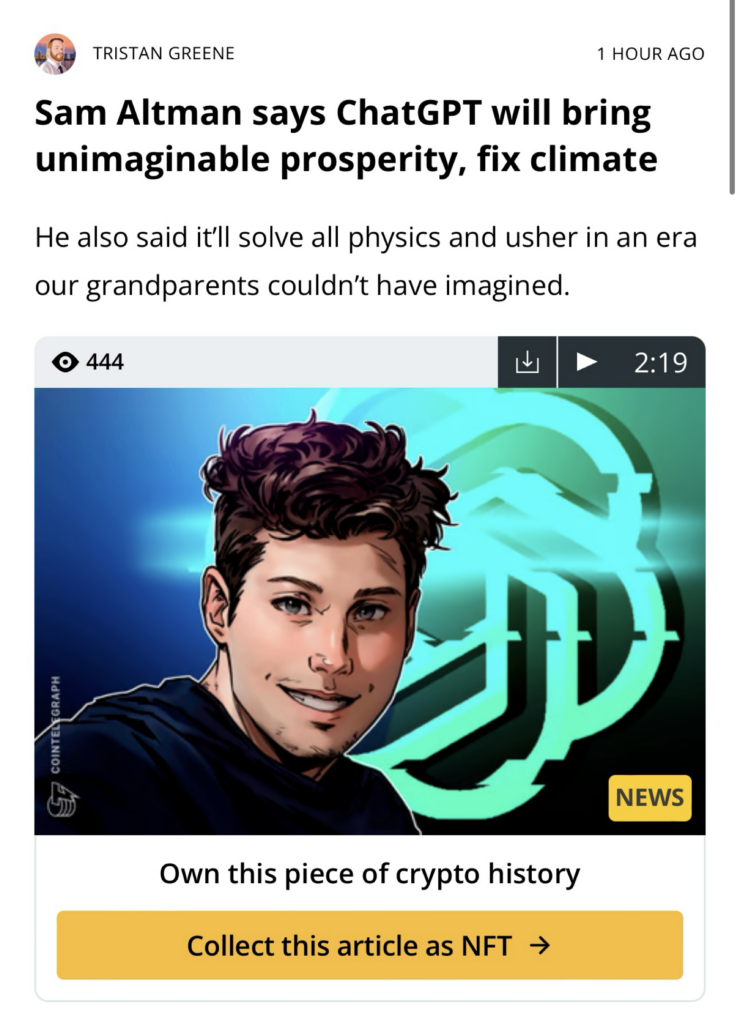

Acknowledge anxiety. New technologies are hard for humans to grasp in the best of times, and the tail of 2024 is not the best of times. Add to this that AI arrived, alas, pre-cancelled—an ecological and cultural nightmare of content spidering and alleged thievery, dropped into a roiling, partisan world, heralded by a bunch of billionaire dorks who constantly threaten that they’re constructing Robot God. I’m finding that it really helps to speak to the Heffalump in the room. At our recent climate-focused event I put this image up on screen:

Not only is Altman claiming LLMs (which need nuclear-level power supplies) will fix global warming, but you can even mint the article itself as an NFT. It’s like if an oil-well fire was an article. People are very curious about what is happening. (Note that Oprah Winfrey recently did a special on AI.) But they’re also very, very suspicious. You need to speak to the real risks—not just the speculative AGI risks—openly, maybe even cheerfully. But at the same time, it’s wild to see something this new enter the world, and realize that no one knows exactly how it will work out, or what’s going to happen next. It’s good to pay attention.