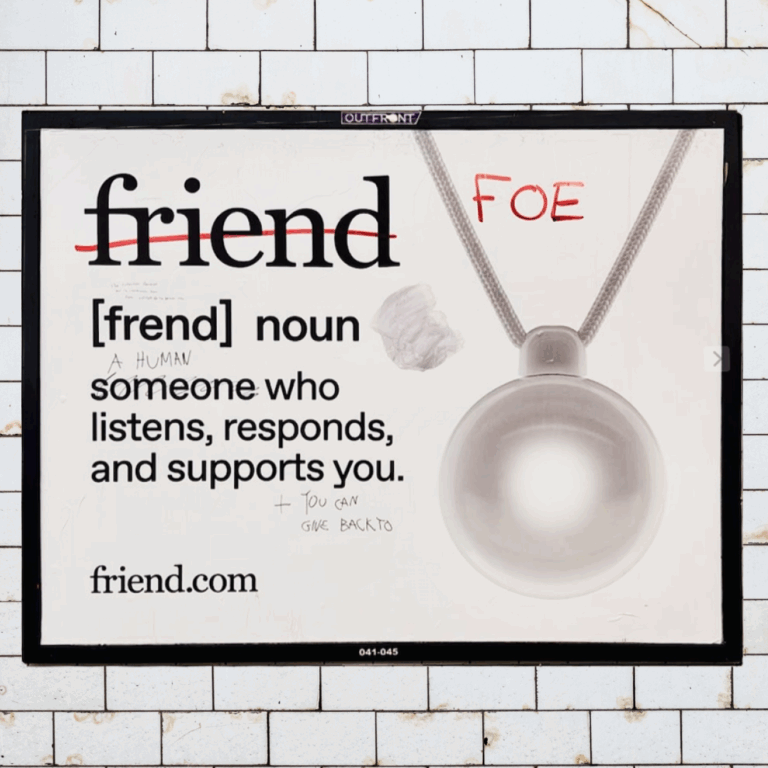

NYC Sends AI Packing

All over the New York City subway, ads for the AI wearable “Friend” are being defaced. It seems clear that New Yorkers don’t want what Silicon Valley is selling—but will the general consumer bite? On this week’s Aboard Podcast, Paul and Rich assess the tensions between big tech and the public, in New York and beyond. After decades of having our data packaged and sold, will anyone want to wear a necklace that listens to them 24/7? Plus: Paul describes what his skincare routine would be like if he were a billionaire.

Show Notes

- Friend.com, or you can watch the trailer, which is supposedly a positive ad for the product and not a teaser for a new episode of Black Mirror…

- There have been a lot of less-than-stellar product reviews, but above all we recommend this recent piece from two WIRED reporters who tried the device, “I Hate My Friend.”

- “Tech Billboards Are All Over San Francisco. Can You Decode Them?”

Transcript

Paul Ford: Hi, I’m Paul Ford.

Rich Ziade: And I’m Rich Ziade.

Paul: And this is The Aboard podcast, the podcast about how AI is changing the world of software. Rich, how was your weekend?

Rich: It was lovely. I was in the woods.

Paul: So was I. We went up to the woods and hung out a little bit together.

Rich: Yes, it was nice.

Paul: I refer to this as renewing our vows as businessmen.

Rich: That’s adorable.

Paul: It’s very cute.

Rich: Businessmen.

Paul: Businessmen. So when you were up in the wilds of New York State?

Rich: Yeah.

Paul: Did you see any advertising for AI products?

Rich: No, but I did see Jim’s HVAC Services.

Paul: A lot of that. I saw a big sign that said, “Vote Republican: It’s the way.”

Rich: Yeah, yeah.

Paul: And I heard coyotes at night, which, those two things are related, but we won’t go into that.

Rich: It’s very creepy.

Paul: So let’s stop talking about talking and start talking.

Rich: Let’s go!

[intro music]

Paul: All right. So we just got back, and you hit New York City, and you start to see ads everywhere.

Rich: It’s New York City.

Paul: You take the subway.

Rich: I do take the subway.

Paul: I take the subway every day, unless I’m biking. And there is this thing, okay? It is a round thing, and it is called Friend. And if you go to friend.com?

Rich: Uh huh…

Paul: It is a pendant that you wear.

Rich: Okay.

Paul: It’s a digital pendant.

Rich: Around your neck?

Paul: It’s kind of grey.

Rich: Okay.

Paul: And it listens to everything you’re doing.

Rich: Without you activating it? It’s just listening to you live your life.

Paul: It’s kind of always there. And it talks to you like a friend. So you—

Rich: Wait, seriously?

Paul: Yeah.

Rich: Like, it talks to you…?

Paul: It talks to you through your phone. It’s like this little AI sensor that picks up all the audio around you. And…

Rich: Okay.

Paul: And then it sort of is like, “Hey!” And, you know, you, you talk to, you’re like, “Wow, boy, that was a hard run.” But it’s like, “Yeah, but we’re outside! It’s cool!”

Rich: “And we’re together and I’m your friend.”

Paul: “I’m your friend—I’m your little grey babe AI friend.”

Rich: People have given this a go, right? Like, there’s the Rabbit thing that came out, like, two years ago. It was too early, and it was a sloppy mess. People are trying this right now.

Paul: Oh, yeah, yeah. That was a bad one. I vaguely remember that.

Rich: And then Jony Ive and Sam Altman got together, and they’re working on some secret projects. There’s a lot going on.

Paul: I feel, though, that everyone has erased Aibo from this conversation. Remember Aibo?

Rich: Which one is that?

Paul: That was that little dog from Sony about 25 years ago.

Rich: I remember that.

Paul: No, that was good stuff.

Rich: It was ahead of its time.

Paul: It really was, because it broke all the time. It didn’t really work. And people were very confused by it. So that’s also the case with how a lot of consumer AI products are landing.

Rich: I mean, when new tech lands, everyone kind of lurches forward and overreaches and fails a couple of times before they get it right.

Paul: So Friend is $129. Everybody is sort of thoughtful and wearing a sweater and kind of hot on their video—

Rich: $129?

Paul: $129 for a subscription, because you can’t just buy anything anymore. It’s not—

Rich: You’re using their servers to store your life.

Paul: Absolutely.

Rich: And then they’re using—

Paul: Oh, no, sorry, sorry. No, I got it all wrong. It’s $129 with no subscription.

Rich: Okay.

Paul: Okay. So it’s just a little friend that you buy. Look at that.

Rich: That’s cheap.

Paul: Yeah. Okay.

Rich: They’re gonna have to make money some other way. Like selling your life data!

Paul: Yeah, that is a little tricky because you’re gonna be getting—

Rich: Anyway.

Paul: So, you know, there’s also—where are my memories stored? “They’re encrypted by your Friend’s circuit board. If it’s lost and damaged, they’re inaccessible forever.” So I think this is a real attempt to build a ChatGPT personal experience, like a sci-fi kind of thing.

Rich: Okay.

Paul: And so this is a very Silicon Valley idea. Like, “Hey—” And even here. “We’re going to encrypt your stuff and we’re going to make it a little bit waterproof and everything’s going to be okay.”

Rich: Okay.

Paul: “And we’re going to follow the rules. But you’ll get access to this little robot guy that will use ChatGPT or whatever and it will help you have a more fulfilling existence.”

Rich: Fine.

Paul: And in New York City, I guess they really wanted to break in here.

Rich: Yeah.

Paul: And so they blew a million bucks on subway ads, which is a lot for subway ads.

Rich: A million dollars on subway ads in New York City.

Paul: Yeah. In New York City.

Rich: It’s plastered all over…

Paul: So they—

Rich: The tunnels.

Paul: Oh, they’re everywhere. It’s this little pendant and it’s, like, lots of little definitions of the word “friend,” and—

Rich: Okay.

Paul: And so, you know, they kind of made their move and it kind of didn’t, it’s kind of getting a lot of pushback because it’s—

Rich: Huh.

Paul: People are just writing, like, you know, “surveillance capitalism, get real friends,” across the ads. Like, you’re just, you’re kind of asking for it when you do this here, to be frank. Like, there’s an element of that.

Rich: I mean, I remember, and this is extremely New York City, though I’m sure it happens in other cities as well. But as far back as I can remember, movie poster ads used to be very popular in the subway.

Paul: Yeah.

Rich: Promoting a movie. And within—

Paul: That’s, like, an invitation.

Rich: Within 20 minutes, Tom Hanks has devil horns.

Paul: Devil horns—

Rich: And a tongue sticking out of his mouth.

Paul: Yeah.

Rich: No matter what the movie.

Paul: It’s a lot of little, lot of little nipples get added.

Rich: A lot of nipples get added. [laughing] Yeah, so listen, advertiser beware if you’re going to put it on the subway. Now, it turns out this is hitting a particular nerve, though, is what you’re saying.

Paul: New York City doesn’t really love AI. It just doesn’t.

Rich: Oh!

Paul: It’s one of the reasons why we keep doubling down and, like, why we built a nice office and so on.

Rich: Yeah.

Paul: It’s part of our ethos: we know this city does not love this technology.

Rich: Interesting. You’re saying that definitively here.

Paul: Yeah, I really do believe that.

Rich: How come?

Paul: The city has an identity, and it’s a lot of different people sharing that identity in really different ways.

Rich: Mmm hmm.

Paul: But there is a commonality to it. And sometimes it’ll just be sports teams. But you can get a Wall Street guy and you can get, like, a Canarsie guy, and you can get them to fight about the Mets.

Rich: You can.

Paul: And it’ll be a pretty good conversation. And they will kind of like and respect each other and see each other as New Yorkers. Right? And everybody takes the train. Everybody. Even if you’re rich.

Rich: Yeah.

Paul: You’re very, very weal—unless you are, like, helicopter rich, you kind of take the train to get around because otherwise it’s—

Rich: It’s just not convenient any other way.

Paul: Yeah. So in order to succeed and thrive here, you engage with the systems of the city. Right?

Rich: Yeah.

Paul: And then you have this thing—and it’s a social place. Like, it really, like, it’s probably the most social place in America.

Rich: It’s social, but there’s sort of an unwritten rule that you’ll be left alone in your own space, whether you’re on the train or in your apartment. So I think that’s part of it.

Paul: I’ll tell you. This is not a great place for, like, Google Glass.

Rich: No.

Paul: Right? Like, we don’t, we don’t like those.

Rich: No, no.

Paul: Yeah.

Rich: Everyone’s running and they want to be left alone, to some extent. It’s a helpful place. It’s a neighborly place, like, New York City, there’s a warmth to it. But, boy, when you’re doing your thing, you don’t want anyone in your business.

Paul: This is real. If you, like, faint on the train, 10 people are gonna help you.

Rich: Yeah, yeah, yeah.

Paul: People emphasize the stories where, like—

Rich: But don’t make eye contact on the train.

Paul: Just let—everybody wants to go about their day. You know, the trickiest one in New York is when somebody’s just sobbing openly on, like, on the train.

Rich: Yeah.

Paul: I’ve seen this a couple times.

Rich: Yeah.

Paul: Usually I’m the cause of it.

Rich: Yeah. That’s funny.

Paul: And you go up and you say, what you can do is you can just go be like, “You okay?”

Rich: And then they’ll be like, “Yeah.” And then you leave.

Paul: And they’ll be like, yeah, they’ll just kind of nod. They’ll do, like, a sad, like, mini nod.

Rich: Yeah.

Paul: And you’re like, “I hope it works out.”

Rich: Yeah.

Paul: And then you walk away. And, like, that’s it. That’s the—like, they’re allowed to sob openly, by the rules of the city.

Rich: Yes.

Paul: You can have any kind of emotional meltdown.

Rich: Yes.

Paul: You can even be, like, partially naked.

Rich: Yeah.

Paul: Right? A little less on the public display. Like, we want it, we want it locked down. We don’t want people, like, when people, like, necking on the subway, it’s kind of gross.

Rich: Yeah.

Paul: But basically, almost anything is good. So you got this little observing guy on your neck.

Rich: That’s the thing. I think that’s what you’re hitting on here, which is, here’s what you can’t do on the subway. Let’s say you’re a tourist. You’ve come to New York. You’ve taken the subway. You’re taking in New York City.

Paul: Yeah.

Rich: You can’t walk up to anyone and say, “Will you be my friend?”

Paul: Ooh, that’s bad, right?

Rich: It’s bad. It’s just not—you can do that in other places I’ve been.

Paul: That’s, like, when they try to sell you their CDs, too. Like, it’s just, you just, there’s certain boundaries, really. Don’t, don’t do that.

Rich: Don’t do that. Right? And so, and I think New York, that’s sort of a pact that everyone has that you just leave me alone. Like, it’s already crowded enough. Just give me my 2 square inches and leave me alone. You can go to a lot of other places, by the way, and you could just chat up a room. You could walk into a diner and say hi to everyone.

Paul: There are rules here. You can be like, if somebody’s, like, wearing a band t-shirt, you can be like, “I saw them a couple of years ago. They were great.” And they’ll go—

Rich: It’s very limited.

Paul: Yeah, man, they were great.

Rich: And that’s that. Yeah, exactly.

Paul: And you’re like, “Okay, cool, see you later.” And then you, honestly, then you say goodbye when you get off the bus.

Rich: So anti-surveillance, anti-personal space—I mean, pro-personal space. That makes a lot of sense to me now. That’s resonating. When you say New York doesn’t want little doodads tracking everything, that’s a real New York thing.

Paul: I think it’s not just that. I think you’ve got the publishing industry and a lot of big anchors of the media industry, the outposts are here. The television networks and what’s left of them.

Rich: Yeah.

Paul: Cable, et cetera. Those are worlds really focus—we’re around the corner from HBO.

Rich: Yeah.

Paul: And so, like, people are a little touchy about this. The constant statement that we will be able to replace everything you do at the prompt.

Rich: Yes.

Paul: Right? In a funny way, our industry, which is, we’re trying to—we’ll talk about what we do in a second, but we’re trying to replace a lot of engineering and consulting work using AI.

Rich: Yeah.

Paul: Right? But people are a little less threatened by that because that industry kind of comes pre-threatened. Like it’s always changing.

Rich: Yeah, yeah.

Paul: But, you know, you—

Rich: You’re making a good point, which is a lot of traditional labor and traditional skills are in New York City.

Paul: Banking.

Rich: Insurance.

Paul: Very—

Rich: Finance.

Paul: Research-driven, right?

Rich: Research-driven. Marketing.

Paul: I mean, let me, let me throw another one out. There are two huge industries in the city we don’t always talk about: government and education.

Rich: Sure.

Paul: You know, I can tell you for a fact, I can look you in the eye right now and I could say, I bet the MTA could use AI in how it does its coding.

Rich: Yeah.

Paul: How much money do you think they could save a year?

Rich: Many millions.

Paul: Millions. Like, probably tens of millions all in if they really—

Rich: Easy.

Paul: But you think about what it would take to make change inside of that organization?

Rich: Correct.

Paul: Or inside of City Hall? There’s just a lot of resistance, but also a lot of opportunity at a time of real stress.

Rich: Yeah.

Paul: So I feel that there’s a lot that this technology has to offer. But I also think there’s really justified pushback.

Rich: Yeah.

Paul: And being here, it’s just kind of thorny. Most of the people in my neighborhood just fricking hate AI.

Rich: Oh, is that right?

Paul: Yeah.

Rich: Okay.

Paul: Well, they all use it.

Rich: Yeah, yeah, yeah.

Paul: Yeah.

Rich: Let me ask you this.

Paul: Okay.

Rich: Back to this ad. So what’s happened?

Paul: Oh, nothing’s happening. It’s just that I’m gonna give you some numbers.

Rich: People are scribbling on it.

Paul: Yeah. You know this—well, they’ll probably spend another million on something else.

Rich: Okay.

Paul: When you can buy friend.com?

Rich: Yeah. You’ve got a lot of capital to spend.

Paul: Venture capital has given you a thumbs up.

Rich: Yeah.

Paul: Yeah.

Rich: Yeah.

Paul: All right. So according to the AI Overview provided by Google, which I’m just going to trust for the purposes of this podcast because it’s a boring subject.

Rich: Run with it.

Paul: Global AI advertising market size. Like, not ads made with AI, but just advertising AI products. Okay?

Rich: Yup.

Paul: Last year: $20.44 billion. This year: $47 billion.

Rich: [whistles]

Paul: 2028: $107 billion.

Rich: In advertising—

Paul: In spend.

Rich: To get people to adopt AI.

Paul: So let’s think about that. They spent $1 million on Friend. They got the whole subway.

Rich: Yeah.

Paul: Okay? Multiply that by a thousand. Multiply that by 100. Okay? And that’s how—you just, like, we are due for Sam Altman’s face being directly beamed into our eyeballs.

Rich: Yeah.

Paul: For the rest of our life.

Rich: Yeah.

Paul: And so—

Rich: Is there a breakdown? Like, is it, I’m sure a lot of it is OpenAI, but…

Paul: I’m not asking Google AI fricking thingamajig to tell me about, to break that down. It’s just gonna make—

Rich: It’s a massive amount of money, right?

Paul: Yeah, yeah.

Rich: I mean, and I think, look, I don’t know a lot about how you rationalize the economics of advertising. What I know is this, if I can get you in, you ain’t coming out. Right? I think that is the bet that people make.

Paul: Well, I mean, this is—

Rich: The retention value of a user of AI is absolutely massive.

Paul: Let’s talk about this for one minute. And actually to give you a good example of how confusing it is to New York City, like, the New York Times actually wrote an article about how tech billboards in San Francisco are confusing. Like, we just don’t know what’s going on.

Rich: Mmm.

Paul: Right? So, but, like, breaking it down for a second, right, like, that lock-in point is really interesting because it’s real frothy right now. And at the same time you’re starting to see the giants get their heads together. So I’ll give you an example: If you use Google AI, not those summaries at the top, but you click the, like, little AI button in the—

Rich: AI Mode.

Paul: AI Mode, and you ask it to do something. I was looking for a messenger bag.

Rich: Yeah.

Paul: Right? And so I was like, okay, and I used ChatGPT, and I used this as a way to compare the different services.

Rich: Sure.

Paul: Perplexity. ChatGPT. The Google one, for the messenger bag, is way more consumery. It searches zillions of sites.

Rich: Sure.

Paul: But my God, it is right there and it is, like, ready. It is ready for commerce.

Rich: Google.

Paul: Google, yeah.

Rich: Yeah.

Paul: The ChatGPT shopping experience is very buttoned up. It’s almost Apple-style. It’s very nice.

Rich: Mmm hmm.

Paul: And it does a lot of work and it sort of, like, gives you good advice. But the Google one is, like, “I know you want to get to the mall right now. Let’s go, let’s go!”

Rich: Yeah, yeah.

Paul: “Get in the van.” And when we were talking about lock-in, that’s what we’re talking about. The giants are about to start, like, getting to, it’s getting to that consumer level where people are going to start to make like $0.10 per transaction enabled by AI because somebody wants a messenger bag.

Rich: So let me ask you a question. Most of AI—by the way, ChatGPT sprinted ahead and they dominate usage today.

Paul: Mmm hmm.

Rich: Like, that’s just life. They were first out.

Paul: They dominate, like, paid AI-as-a-service usage. But I’m gonna bet Google is increasingly—more people are using Google now.

Rich: Well, that’s the question I wanted to ask. Do you think, ultimately, we’re headed to free?

Paul: Yeah, I do. Because these platforms all get commoditized faster and faster and faster.

Rich: So even OpenAI, even ChatGPT, is going to be free. There’s going to be a free tier.

Paul: I think, if I want—so here’s what you know, I’m coming, let me bring this back to what you originally were talking about, right, which is these giant companies and smaller ones are advertising like crazy in order to build a moat because they know that pressure is coming.

Rich: Yeah.

Paul: I could probably run the—I can, I can run the equivalent of ChatGPT-4 early days on a laptop.

Rich: Yeah.

Paul: Right. Like, it’s just kind of squeezing.

Rich: Yeah.

Paul: I wouldn’t have all the data, but.

Rich: Yeah.

Paul: Still, right?

Rich: Yeah.

Paul: So this is like, and it’s going to be more and more on your phone.

Rich: So to break it down, what you’re saying is if AI service across the street is free and this one’s taking $12 from me and I can’t tell the difference, I’m going to go across the street to free.

Paul: That’s right. And over here on free, I have Google, which is incredibly good at—

Rich: Fast.

Paul: Well, it marches you through a funnel towards a transaction in a million different ways.

Rich: Yeah, yeah.

Paul: Right? Affiliates, there’s just a huge amount of ways for Google to make money—

Rich: Yeah.

Paul: And advertising being sort of one of the big ones.

Rich: Here is what I think is so tricky about this trend and this path. I think you’re right. I think eventually, hitting AI search is going to be free everywhere, right? But as we all know, free is not really free. Your data and your behavioral data are invaluable for giving the right answer, right? And Friend, from what you—I mean, we don’t know this for sure, but you looked at the site, it’s $130, and then you’re not paying monthly. So there’s gonna be some marketplace for your behavior behind the scenes.

Paul: It’s an interesting gamble, too. I mean they could also assume that maybe they’ll sell something. But yes, yes. No one can just make and sell a product. Unless it’s, like, Scrub Daddy. Everything has to be a data play.

Rich: It has to be a data play, right? And I think what’s—

Paul: Even Scrub Daddy probably has a large data platform.

Rich: They probably have an excellent, excellent CRM behind Scrub Daddy.

Paul: Yeah.

Rich: So this is a question I’m going to bounce back to you, which is the argument for data in the past, to barter your data in the past?

Paul: Mmm hmm.

Rich: Is that I get to know you, and the ads I show you are not garbage. They’re actually things you care about.

Paul: Mmm hmm.

Rich: So you seem to be into cooking. And so I’m showing you cool utensils and frying pans.

Paul: Mmm hmm.

Rich: And that’s good. Yes, I sniffed out that you follow certain Instagram users that cook food. And I just assume that you like cooking. What’s wrong with that? And so now you bring AI into the picture and it’s that times a thousand, meaning AI’s observation of your behavior is so much better than anything we’ve ever had. And so it’s gonna pick out all kinds of things about you. Your eating habits, your lifestyle. It might even triangulate on, like, health risks before you even know it for all we know. I mean, I’m putting this forward so we can have a Socratic discussion here. What’s so bad about that?

Paul: Yeah. Okay.

Rich: I’m gonna save your life, Friend. Friend.com.

Paul: All of that is real. All of those things are probably happening and will keep happening. And they will talk about them during Apple keynotes.

Rich: Ooh.

Paul: Remember that Apple keynote where it was like, man, you know, “The bear came into the house, but the Apple Watch—”

Rich: Saved their lives? Yeah.

Paul: There was that one keynote where—

Rich: A lot of life-saving.

Paul: It’s all about death, right?

Rich: Well, there’s a lot of cliffs in California.

Paul: You know, Tim Cook’s getting older.

Rich: [laughing] He slips!

Paul: We all start to, we look into the void, and we feel we have to do something about it.

Rich: What is it called? Medi-Alert, Medic-Alert?

Paul: There’s just, let’s not—

Rich: The one where you’re, like, an old person alone.

Paul: Oh, Medalert, I think it was.

Rich: Medalert.

Paul: Something like that, where you fall down the stairs and you can’t get up.

Rich: Correct.

Paul: Yeah, we’ve rebuilt that at an infrastructural level using a multitrillion-dollar industry.

Rich: There we go.

Paul: Good for us. Okay, so let me get back to your question.

Rich: What’s bad?

Paul: So I think there will be lots of virtuous things and that will also be kind of the, that’ll be a big part of the advertising and marketing. Here’s what is actually genuinely upsetting and terrifying about advertising-enabled platforms that use AI and are free.

Rich: Okay.

Paul: LLMs as we understand them, and not just from a language point of view, but from image generation and what they search and so on and so forth, are truly able to sculpt things to your interest. And not just that, but generate kind of interstitial narrative. They can fill in blanks.

Rich: Mmm.

Paul: They might fill them, and increasingly they fill them in with pretty good material based on web search and so on and so forth. But you’re not just, like, showing somebody a list of pages that are relevant to their interests. You’re able to create a whole narrative and story for them.

Rich: Mmm.

Paul: Now that narrative and story might be—

Rich: With imagery and language and video…

Paul: Literally—

Rich: Custom-created.

Paul: On a little device that you wear around your neck that tells you what to do next or how it feels about what you’re doing.

Rich: Yeah.

Paul: Right? So Friend is, like, a nice Silicon Valley concept until fundamentalist religious people use it to control their daughters. And now we’re really upset about it.

Rich: Yeah.

Paul: Except the people who really want it think that it’s a great way to get people to invest in the faith that they were raised in.

Rich: Yeah.

Paul: And so, like, all this stuff is coming for us, and the ability—

Rich: It’s been attempted. Your argument is sound because it’s come for us in cruder ways for the last 20 years.

Paul: And we’re very vulnerable. Right?

Rich: 100%.

Paul: This is a very subtle—I mean, you’re doing Socratic dialogue, but this is sophistry, right? Like, it can be used in very, very subtle ways to sort of steer your thinking. And not only that, it can start to show you links and show you things that are relevant to you. It’s very easy, given what we know about social media and how it radicalizes people, to imagine an AI that is coached and trained to radicalize you in the direction of purchasing a Samsung fridge! Or in the direction of committing a crime.

Rich: Or in the direction of intaking and buying into false information and false narratives that radicalize you and create division and friction.

Paul: So I think what New York City sees?

Rich: Yeah.

Paul: When it sees Friend.

Rich: It sees a bad ending.

Paul: It sees that ending.

Rich: Yeah.

Paul: And I think that when Friend spends the million dollars, it’s Silicon Valley utopianism kind of saying, like, “No, come on, this is actually kind of cool. It can do a lot of cool stuff for you.”

Rich: Yeah.

Paul: And I think there’s two elements. One is we never were going to buy it, but also, Silicon Valley’s absolutely going banana cakes. And we’re like, “We don’t want anything to do with that,” culturally.

Rich: Yeah.

Paul: Like, we just don’t want that.

Rich: Yeah.

Paul: I don’t want Peter Thiel talking about the antichrist while he just sweats that sort of weird gossamer sweat that he has.

Rich: It’s glossy.

Paul: Yeah.

Rich: It’s very glossy.

Paul: I don’t know why he’s slick. Like, if I had that kind of money, I would never be slick. I’d be matte. I’d be matte.

Rich: A matte finish.

Paul: Yeah, absolutely. Like, an old Mac monitor. You remember how good those were when they were—

Rich: I’d buy it.

Paul: Oh, I’d buy a matte monitor right now. Do you remember them?

Rich: Yeah.

Paul: God, I hate everything being shiny, including our venture capital, billionaire, antichrist-believer bloodsuckers.

Rich: Well, there goes our chances of raising money.

Paul: Yeah, those were shot a while ago. [laughing] Actually, we blew that out when we turned 26.

Rich: Can I close with a point here?

Paul: Somebody’s got to do it.

Rich: Okay.

Paul: Well, what should people do here? We got to advertise our AI company. What are we going to do?

Rich: We’re different. And we’ll get to that in a minute.

Paul: Okay.

Rich: Let me make this point.

Paul: Make a point.

Rich: I think we’ve been gathering data in exchange for giving you free services for a very long time now.

Paul: I mean, to the point that there was art about it in the ’80s.

Rich: Yeah.

Paul: Yeah.

Rich: So Facebook, Google, Instagram, all these platforms essentially are free. They are free. I don’t give—I mean, I give Google money for Gmail and Google apps, but for search, I don’t give them money. Never given Facebook any money. Never given Instagram any money.

Paul: No.

Rich: Right? Never given X any money. And what we found happens is this. You can start—

Paul: Oh, no, not true. We’ve given them lots of money as advertisers over the years.

Rich: No, I mean as users.

Paul: I know, but I’m just making that point.

Rich: Yes, yes, yes, yes, yes. When the narrative starts, the intentions may be benevolent, which is, we’re going to make the world a more connected place and we’re going to use information to get you information that you want and advertisers that you care about and products you want and whatnot. But what we found happens every single time is that malevolent forces, whether it be driven by money or power or whatever, abuse these mechanisms. And we’ve seen it to the point where, like, societies have melted down.

Paul: They’re malevolent now, but they don’t even have to be malevolent. They can just be ideologically relatively indifferent. Like, the amount of positive rule-making, forceful behavior that you need to enact in order to make humans line up is essentially anti-growth. Like, it’s really hard to do both. And nobody really has—

Rich: Correct.

Paul: Except for LinkedIn, because it’s terrible.

Rich: So when I look at Friend? Friend may have a charter that says we will never give our data to large corporations or other governments. And I mean, governments are using data and influencer campaigns to divide other countries. Like, it’s literally a weapon at this point.

Paul: I mean, China and Russia—

Rich: All day long—

Paul: —are completely targeting us—

Rich: All day long. So Friend shows up. It was like, cool, another spigot.

Paul: Mmm.

Rich: Another path to really understanding where you’re at. And I was just looking back on, was it the 2016 elections, where they were, like, making custom ads? Like, if you were a veteran and you had a gun license, you got a cust—

Paul: Well, it’s the same as when you go on Facebook and they’re like, “People named Ford really understand how much Notre Dame is the best team.”

Rich: Exactly. But now we have this.

Paul: Yeah.

Rich: You don’t even need a, you don’t even need a person on the other side to make the custom ad. It’s just going to make it, right?

Paul: Well, not only that, you’re going to opt into it because the upside is so great for you.

Rich: User beware. You’re not a buyer. You don’t think you’re a buyer, you’re a user. But user beware, because we’ve seen what happens now with free data, and now this thing’s around your neck. And the necklace—what do you call the, there’s the pendant, and then the necklace?

Paul: Yeah.

Rich: Is just gonna get tighter and tighter.

Paul: It’s a strap—oh, that’s good! That’s good! It’s really good!

Rich: You see what I did there?

Paul: Yeah, that’s really good.

Rich: All right.

Paul: Yeah, until you’ve been choked by data.

Rich: You know what we don’t do here at Aboard, Paul?

Paul: A lot of things.

Rich: We don’t sell junk jewelry that is selling your data!

Paul: No, we don’t. I don’t know—we’re not totally sure it’s selling your data. It’s a little opaque. But we’ll figure it all out.

Rich: I don’t know anything about you. I don’t know what your interests are.

Paul: Okay.

Rich: I have no idea where you land politically.

Paul: We’ve worked together 10 years. That’s really sad.

Rich: But we sell software.

Paul: Oh, I see where you’re going.

Rich: We sell software built with AI, which puts it into your hands much cheaper, much faster.

Paul: But wait a minute. What if AI can’t build all my software?

Rich: People.

Paul: What?

Rich: People who need people…

Paul: Are the luckiest people in the world, and that’s you as an enterprise software customer with Aboard.

Rich: To be clear, we build software for companies. It’s complicated software. Complicated software is beyond AI’s reach, and you need people to get it over the line. And we put people to work. But first, you can take a first cut by going to aboard.com and trying Aboard. It’ll give you a taste of what we’re capable of pulling off.

Paul: Delicious little snacks.

Rich: Delicious.

Paul: Yeah.

Rich: Check it out. Aboard.com and give us five stars. Thumbs up. I don’t know, what else do people measure?

Paul: Six stars if you’ve got them.

Rich: Tomatoes.

Paul: Like and subscribe.

Rich: 100—

Paul: Like and subscribe.

Rich: Good job, Paul.

Paul: All right, let’s get back to work.

Rich: Go make a friend.

Paul: Yeah, make a real friend in New York City. Come on by. We like you. We don’t want to talk to you, but we want you to visit.

Rich: Have a good week.

Paul: Bye.

[outro music]