This is where AIs go before they come out and chat with you.

Over the holiday I decided to see what it would take to code an actual application with AI. I wanted to make something pretty complex: a database-backed, component-driven web tool that calls external APIs and updates webpages in real time. I worked on it in the evenings and didn’t finish it, but for a good reason. Because I was worried it would become self-aware and take over the world! Just kidding. I am above the age of 15. I’ll explain the actual reason in a minute.

Right now, a consensus about programming with AI is forming, which breaks down to (1) LLMs write pretty good code; (2) they write too much of it and it’s buggy; (3) they tend to get backed into corners they can’t get out of. One minute you’re saying, “build me a pretty table view of this database table,” and it’s going great. The next minute, you actually have to dive in and debug and fix things yourself, like some kind of early-2024 cave-programmer.

The basic idea emerging is that you should let the AI write more tests—have it write some code that measures whether other code is working, and create a kind of virtuous loop where it’s constantly checking its own work.
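
Here is a rough sketch of what that loop could look like. Everything in it is an assumption for illustration: `generate` is a hypothetical stand-in for whatever LLM call you use, `run_tests` is a hypothetical test runner that returns a list of failure messages, and the toy `fake_*` functions exist only so the sketch runs without a real model.

```python
from typing import Callable, List, Optional, Tuple


def refine_until_green(
    generate: Callable[[str], str],
    run_tests: Callable[[str], List[str]],
    prompt: str,
    max_rounds: int = 5,
) -> Tuple[Optional[str], int]:
    """Ask for code, test it, and feed failures back until the tests pass.

    `generate` is a hypothetical stand-in for an LLM call; `run_tests` is a
    hypothetical test runner (an empty list means everything passed).
    """
    current_prompt = prompt
    for round_number in range(1, max_rounds + 1):
        code = generate(current_prompt)
        failures = run_tests(code)
        if not failures:
            return code, round_number
        # Fold the failures into the next prompt so the model can react to them.
        current_prompt = (
            prompt
            + "\n\nThe last attempt failed these tests:\n"
            + "\n".join(failures)
        )
    return None, max_rounds


# Toy stand-ins so the sketch runs without a real model.
def fake_generate(prompt: str) -> str:
    # "Fixes" the bug only after it has been told about a failure.
    if "failed" in prompt:
        return "def add(a, b):\n    return a + b\n"
    return "def add(a, b):\n    return a - b\n"


def fake_run_tests(code: str) -> List[str]:
    namespace: dict = {}
    exec(code, namespace)
    return [] if namespace["add"](2, 3) == 5 else ["add(2, 3) should be 5"]


code, rounds = refine_until_green(fake_generate, fake_run_tests, "Write add(a, b).")
print(rounds)
```

With these toy stand-ins the first attempt fails and the second passes, so the loop stops after two rounds. A real setup would swap in an actual model call and an actual test runner, and the `max_rounds` cap is the part that keeps it from spinning forever when the model gets backed into a corner.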

Here are two representative quotes. The first comes from David Crawshaw, writing about building large-ish Go programs:

What we have now is a world where the tradeoffs have shifted. It is now easier to write more comprehensive tests. You can have the LLM write the fuzz test implementation you want but didn’t have the hours to build properly. You can spend a lot more time writing tests to be readable, because the LLM is not sitting there constantly thinking “it would be better for the company if I went and picked another bug off the issue tracker than doing this.” So the tradeoff shifts in favor of having more specialized implementations.

The second is from a long article called “GDD: Generative Driven Design,” by Ethan Knox, which proposes a vision for the future of coding. (Note that “GDD” is a riff on “TDD,” which means “Test-Driven Development.”)

In this vision, an Engineer is still very heavily involved in the mechanical processes of GDD. But it is reasonable to assume that as a codebase grows and evolves to become increasingly GenAI-able due to GDD practice, less human interaction will become necessary. In the ultimate expression of Continuous Delivery, GDD could be primarily practiced via a perpetual “GDD server.” Work will be sourced from project management tools like Jira and GitHub Issues, error logs from Datadog and CloudWatch needing investigation, and most importantly generated by the GDD tooling itself. Hundreds of PRs could be opened, reviewed, and merged every day, with experienced human engineers guiding the architectural development of the project over time. In this way, GDD can become a realization of the goal to automate automation.

If you know the history of software development, this kind of future would represent an evolution of past practices rather than a huge sea change. I think it’s highly realistic and a very sensible way to drive LLM-based speed improvements into existing codebases. You’re going to see a lot of people using an approach like this to convert the giant bank’s enormous library of COBOL code into modern, exciting Java code. It’ll be less exhausting, because it will help you with the awful parts: testing, documentation, formatting. In theory, huge, horrible projects that always failed in the past could actually be completed. That’s exciting!

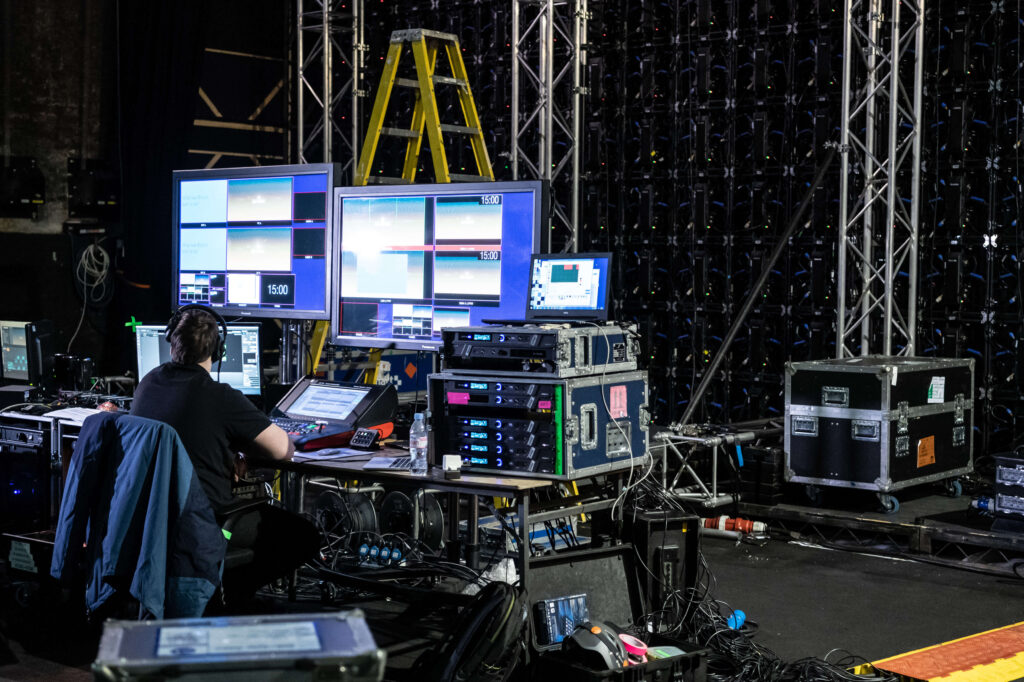

But I’ve been trying a different path. Call it Rehearsal-Driven Development. If you’ve ever performed before an audience in any way—PowerPoints count!—you know that all the work is in preparation and rehearsal. The actual performance is a side-effect of that.

The way I spent my holidays was by sitting down, feeding the AI some SQL files and a few prompts, and trying to get it to build me an app in a couple of hours. I asked it to fix its own errors a lot. When it runs into a wall or gets confused, I’ve learned, I often just give up. “Forget it!” I yell. “Erase everything and start all over again. Take it from the top!” I run a script that erases everything except the scaffolding, revise my prompts, and restart the chat.
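
For the curious, a reset script like that can be tiny. This is a minimal sketch, not my actual script: the file names in `KEEP` are hypothetical, and the demo runs in a throwaway temp directory so nothing real gets erased.

```python
import shutil
import tempfile
from pathlib import Path

# Hypothetical scaffolding: top-level names that survive a reset.
KEEP = frozenset({"prompts.md", "schema.sql", "reset.py"})


def reset_project(root: Path, keep: frozenset = KEEP) -> list:
    """Delete every top-level entry in `root` whose name is not in `keep`.

    Returns the sorted names of what was removed.
    """
    removed = []
    # Snapshot the listing first so we are not deleting while iterating.
    for entry in list(root.iterdir()):
        if entry.name in keep:
            continue
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)
        else:
            entry.unlink()
        removed.append(entry.name)
    return sorted(removed)


# Demo in a throwaway directory so nothing real is touched.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "prompts.md").write_text("Build an API.\n")
    (root / "schema.sql").write_text("CREATE TABLE t (id INTEGER);\n")
    (root / "api").mkdir()
    (root / "api" / "server.py").write_text("# generated\n")
    (root / "index.html").write_text("<!-- generated -->\n")

    removed = reset_project(root)
    remaining = sorted(p.name for p in root.iterdir())
    print(removed)
    print(remaining)
```

The point of keeping the list of survivors explicit is that the prompts and the SQL are the real work product. Everything the AI generated is disposable, which is what makes “take it from the top” cheap.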

Like I said, I think the test-driven approach is very interesting. But the rehearsal-driven approach is interesting to me because it’s clear that LLMs are going to get much faster and much cheaper. I started using a Chinese LLM called DeepSeek, which apparently cost under $6 million to train, even though they had to use crappy GPUs because we won’t let Nvidia sell them the good stuff. (Incidentally, that also hints that we’re getting out of the ecological disaster zone with LLM training: we’re burning less fuel to make this stuff.)

DeepSeek is so cheap to use as an API it’s basically free. I’ve used it for ten days pretty steadily and it’s cost me about $2. It’s not as good as Claude for coding, but it’s fast and it doesn’t whine about token limits and kick me out. I want to use the cheapest, most convenient tool to build the most obvious stuff as fast as possible.

The first time I “built” a backend API it took nearly a day. But last night, I had it build me an API in ten minutes, and it was better than the first one. There’s no magic to it. You just keep learning what the system will do well and where its limits are, write that down in a big prompts file, and then coach/direct it to do it again, but better this time. The same is true of the frontend. It initially took multiple nights of messing around, but last night, I did the same work in ten minutes. I found some limits in DeepSeek and switched to Claude, and it cost me $3 instead of ten cents. Such is life.

Now I’m working on a real-time connection between the API and the frontend, so I can update the user on database operations via websockets. This is the sort of thing that I never bothered with before because it’s a nightmare that always sucks, but in this case, I was able to get something that kind of worked last week, and now I’m optimistic I’ll be able to get that part down to ten minutes too.

This feels like rehearsal, like I’m building up to a performance. I write my little one-act play with lots of places for improvisation and riffing, and the computer performs it, and then I change the rules and we do it again. It’s sort of like a 1970s experimental Andre Gregory theater thing, except I’m doing it to build data management apps. I’m sure this is the future the La MaMa Experimental Theatre Club envisioned.

Why am I doing this? Because that’s how things work in 2025. I want to develop the knack. I’m also tired of San Francisco AI companies treating us like toddlers at a magic show. “Oooh…look! A picture of a kitty! On a bicycle! Who wants AGI!” It’s not a weird magic nascent intelligence. It’s a giant sloppy multilayered vector database that pattern-matches inputs then spews semi-random outputs—in other words, it’s just software. We can figure it out. Once I’m done with my boring corporate system I’m going to take the patterns and build a complex allowance tracker for my kids, with custom logins, chores tracked, parental admin rights, and so forth. It should take about an hour.